How to use nn.Embedding (PyTorch)


■Description of Embedding function

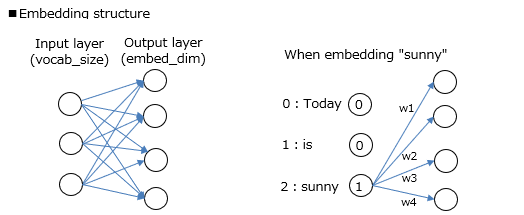

Word embedding converts each word that makes up a sentence into a vector.

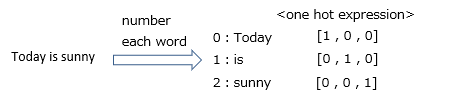

For example, to embed the sentence "Today is sunny", the sentence is first split into words, each word is assigned an ID, and each ID is expressed as a one-hot vector.

■Concrete example of embedding function
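The ID assignment and one-hot encoding described for "Today is sunny" can be sketched as follows. The IDs used here (0, 1, 2 in order of first appearance) and the names `word_to_id`, `ids`, and `one_hot` are assumptions chosen for illustration; any consistent numbering would work the same way.

```python
import torch
import torch.nn.functional as F

sentence = "Today is sunny"
words = sentence.split()  # ["Today", "is", "sunny"]

# Assumed ID scheme: number the words in order of first appearance.
word_to_id = {w: i for i, w in enumerate(dict.fromkeys(words))}

# Convert the sentence into a tensor of word IDs.
ids = torch.tensor([word_to_id[w] for w in words])

# Express each ID as a one-hot vector whose length is the vocabulary size.
one_hot = F.one_hot(ids, num_classes=len(word_to_id))

print(word_to_id)
print(one_hot)
```

Each row of `one_hot` has a single 1 at the position given by the word's ID, so the vector length grows with the vocabulary size.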

The perceptron parameters (the embedding weights) are initialized with random values. These values are then updated by learning.

import torch
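As a minimal sketch of the random initialization and the update by learning, the example below creates an `nn.Embedding` layer, looks up three word IDs, and applies one optimizer step. The vocabulary size of 3, the embedding dimension of 4, the IDs, the squared-sum loss, and the learning rate are all assumptions made for illustration, not values taken from the original article.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)  # make the random initial weights reproducible

vocab_size = 3     # assumed: "Today", "is", "sunny"
embedding_dim = 4  # assumed vector length, chosen for illustration

# The weight matrix (vocab_size x embedding_dim) starts with random values.
embedding = nn.Embedding(vocab_size, embedding_dim)

ids = torch.tensor([0, 1, 2])  # assumed IDs for "Today", "is", "sunny"
vectors = embedding(ids)       # one row of the weight matrix per ID

# Looking up an ID gives the same result as multiplying its one-hot
# vector by the weight matrix.
one_hot = F.one_hot(ids, num_classes=vocab_size).float()
same_as_one_hot = torch.allclose(one_hot @ embedding.weight, vectors)

# One training step with a dummy loss, to show that the weights are learnable.
optimizer = torch.optim.SGD(embedding.parameters(), lr=0.1)
before = embedding.weight.detach().clone()
loss = vectors.pow(2).sum()  # hypothetical loss, for illustration only
loss.backward()
optimizer.step()
weights_changed = not torch.equal(before, embedding.weight.detach())

print(vectors.shape, same_as_one_hot, weights_changed)
```

In a real model the loss would come from the downstream task, and the embedding weights would be updated together with the rest of the network.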
