What is the Softmax function

■What is the softmax function

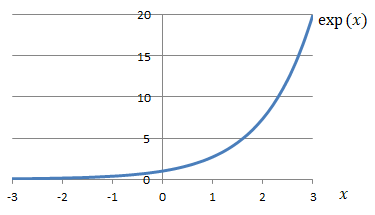

The softmax function is one of the activation functions commonly used in neural networks. It converts a vector of inputs into outputs that are all positive and sum to 1, as shown below.

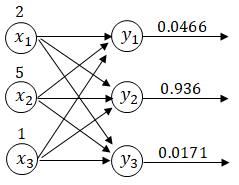
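The standard definition, y_i = exp(x_i) / (exp(x_1) + ... + exp(x_n)), takes only a few lines of plain Python. This is a minimal sketch of our own, not code from the original article, and the input vector is an arbitrary example. The function first subtracts max(x) from every input before exponentiating. This is a common trick that avoids overflow for large inputs. It does not change the result, because the shared factor exp(-max(x)) cancels between numerator and denominator.

```python
import math

def softmax(x):
    """Return softmax(x) for a list of real numbers x.

    Subtracting max(x) before exponentiating keeps exp() from overflowing;
    the shift cancels in the ratio, so the output is mathematically unchanged.
    """
    m = max(x)
    exps = [math.exp(v - m) for v in x]
    total = sum(exps)
    return [e / total for e in exps]

# Arbitrary example input, chosen only for illustration
x = [1.0, 2.0, 3.0]
y = softmax(x)
print([round(v, 4) for v in y])  # → [0.09, 0.2447, 0.6652]
```

The largest input receives the largest share, and every output stays strictly positive.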

As a concrete example, the value of y computed for a given input x is shown below.

■Difference between ratio calculation and softmax function
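To make the comparison concrete, the sketch below applies both normalizations to the same inputs. It is our own illustration: the example vectors are arbitrary, and `ratio_normalize` is a hypothetical helper name. The plain ratio y_i = x_i / (x_1 + ... + x_n) gives valid proportions only when every x_i is non-negative and the sum is non-zero. Softmax always returns strictly positive values that sum to 1. It also depends only on the differences between inputs, so adding the same constant to every x_i leaves the output unchanged.

```python
import math

def ratio_normalize(x):
    """Plain ratio normalization: y_i = x_i / sum(x)."""
    total = sum(x)
    if total == 0:
        raise ZeroDivisionError("ratio normalization is undefined when sum(x) == 0")
    return [v / total for v in x]

def softmax(x):
    """Numerically stable softmax: exp(x_i - max) / sum_j exp(x_j - max)."""
    m = max(x)
    exps = [math.exp(v - m) for v in x]
    total = sum(exps)
    return [e / total for e in exps]

positive = [1.0, 2.0, 3.0]
print(ratio_normalize(positive))  # values 1/6, 1/3, 1/2
print(softmax(positive))

mixed = [-1.0, 2.0, 3.0]
print(ratio_normalize(mixed))  # → [-0.25, 0.5, 0.75]: a negative "proportion"
print(softmax(mixed))          # every entry positive, entries sum to 1

# Shifting every input by the same constant does not change softmax
shifted = [v + 10.0 for v in positive]
print(softmax(shifted))
```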

If the only goal is normalization, the simple ratio calculation y_i = x_i / (x_1 + x_2 + ... + x_n) would seem to be enough, so what is the advantage of using the softmax function?

■Differentiation of the softmax function

Below, we differentiate the softmax function using the quotient rule (the derivative of a ratio of two functions).
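One standard way to apply the quotient rule is sketched below; the original figures may present the steps differently. Write S = exp(x_1) + ... + exp(x_n), so that y_i = e^{x_i} / S. The numerator e^{x_i} depends on x_j only when i = j, and ∂S/∂x_j = e^{x_j}. The symbol δ_ij is the Kronecker delta (1 if i = j, otherwise 0).

```latex
\frac{\partial y_i}{\partial x_j}
  = \frac{\dfrac{\partial e^{x_i}}{\partial x_j}\, S - e^{x_i}\, \dfrac{\partial S}{\partial x_j}}{S^2}
  = \frac{\delta_{ij}\, e^{x_i} S - e^{x_i} e^{x_j}}{S^2}
  = \frac{e^{x_i}}{S}\left(\delta_{ij} - \frac{e^{x_j}}{S}\right)
  = y_i\,(\delta_{ij} - y_j)
```

In particular, ∂y_i/∂x_i = y_i(1 − y_i), and ∂y_i/∂x_j = −y_i y_j for i ≠ j.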

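The softmax derivative ∂y_i/∂x_j = y_i(δ_ij − y_j), obtained with the quotient rule, can be checked numerically. The sketch below is our own illustration. It builds the analytic Jacobian and compares it with central finite differences. The input vector, the step size h = 1e-6 and the tolerance are assumptions chosen for double-precision arithmetic.

```python
import math

def softmax(x):
    """Numerically stable softmax of a list of floats."""
    m = max(x)
    exps = [math.exp(v - m) for v in x]
    total = sum(exps)
    return [e / total for e in exps]

def softmax_jacobian(x):
    """Analytic Jacobian: J[i][j] = dy_i/dx_j = y_i * (delta_ij - y_j)."""
    y = softmax(x)
    n = len(y)
    return [[y[i] * ((1.0 if i == j else 0.0) - y[j]) for j in range(n)]
            for i in range(n)]

def numerical_jacobian(x, h=1e-6):
    """Central differences: (softmax(x + h*e_j) - softmax(x - h*e_j)) / (2h)."""
    n = len(x)
    jac = [[0.0] * n for _ in range(n)]
    for j in range(n):
        plus = list(x)
        plus[j] += h
        minus = list(x)
        minus[j] -= h
        y_plus, y_minus = softmax(plus), softmax(minus)
        for i in range(n):
            jac[i][j] = (y_plus[i] - y_minus[i]) / (2 * h)
    return jac

x = [0.5, -1.0, 2.0]  # arbitrary example input
analytic = softmax_jacobian(x)
numeric = numerical_jacobian(x)
max_err = max(abs(a - b)
              for row_a, row_n in zip(analytic, numeric)
              for a, b in zip(row_a, row_n))
print(f"max abs difference: {max_err:.2e}")
```

The outputs always sum to 1, so every row and every column of this symmetric Jacobian sums to zero. This gives a second quick check on the formula.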